# Shhhh, they’re listening – inside the coming voice-profiling revolution


This hypothetical situation may sound as if it’s from some distant future. But automated voice-guided marketing activities like this are happening all the time.

If you hear “This call is being recorded for training and quality control,” it isn’t just the customer service representative they’re monitoring.

It can be you, too.

When conducting research for my forthcoming book, “The Voice Catchers: How Marketers Listen In to Exploit Your Feelings, Your Privacy, and Your Wallet,” I went through over 1,000 trade magazine and news articles on the companies connected to various forms of voice profiling. I examined hundreds of pages of U.S. and EU laws applying to biometric surveillance. I analyzed dozens of patents. And because so much about this industry is evolving, I spoke to 43 people who are working to shape it.

It soon became clear to me that we’re in the early stages of a voice-profiling revolution that companies see as integral to the future of marketing.

Thanks to the public’s embrace of smart speakers, intelligent car displays and voice-responsive phones – along with the rise of voice intelligence in call centers – marketers say they are on the verge of being able to use AI-assisted vocal analysis technology to achieve unprecedented insights into shoppers’ identities and inclinations. In doing so, they believe they’ll be able to circumvent the errors and fraud associated with traditional targeted advertising.

Not only can people be profiled by their speech patterns, but they can also be assessed by the sound of their voices – which, according to some researchers, is unique and can reveal their feelings, personalities and even their physical characteristics.

## Flaws in targeted advertising

Top marketing executives I interviewed said that they expect their customer interactions to include voice profiling within a decade or so.

Part of what attracts them to this new technology is a belief that the current digital system of creating unique customer profiles – and then targeting them with personalized messages, offers and ads – has major drawbacks.

A simmering worry among internet advertisers, one that burst into the open during the 2010s, is that customer data often isn’t up to date, profiles may be based on multiple users of a device, names can be confused and people lie.

Advertisers are also uneasy about ad blocking and click fraud, which happens when a site or app uses bots or low-paid workers to click on ads placed there so that the advertisers have to pay up.

These are all barriers to understanding individual shoppers.

Voice analysis, on the other hand, is seen as a solution that makes it nearly impossible for people to hide their feelings or disguise their identities.

## Building out the infrastructure

Most of the activity in voice profiling is happening in customer support centers, which are largely out of the public eye.

But there are also hundreds of millions of Amazon Echoes, Google Nests and other smart speakers out there. Smartphones also contain such technology.

All are listening and capturing people’s individual voices. They respond to your requests. But the assistants are also tied to advanced machine learning and deep neural network programs that analyze what you say and how you say it.

Amazon and Google – the leading purveyors of smart speakers outside China – appear to be doing little voice analysis on those devices beyond recognizing and responding to individual owners. Perhaps they fear that pushing the technology too far will, at this point, lead to bad publicity.

Nevertheless, the user agreements of Amazon and Google – as well as Pandora, Bank of America and other companies that people access routinely via phone apps – give them the right to use their digital assistants to understand you by the way you sound. Amazon’s most public application of voice profiling so far is its Halo wristband, which claims to know the emotions you’re conveying when you talk to relatives, friends and employers.

The company assures customers it doesn’t use Halo data for its own purposes. But it’s clearly a proof of concept – and a nod toward the future.

## Patents point to the future

The patents from these tech companies offer a vision of what’s coming.

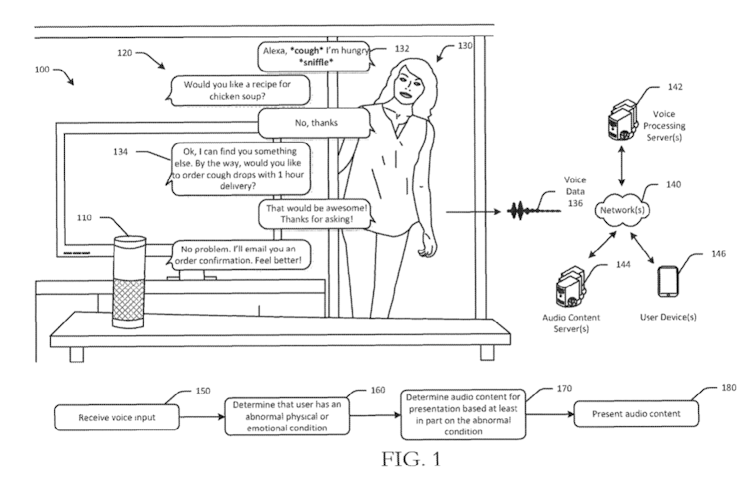

In one Amazon patent, a device with the Alexa assistant detects irregularities in a woman’s speech that suggest she has a cold, using “an analysis of pitch, pulse, voicing, jittering, and/or harmonicity of a user’s voice, as determined from processing the voice data.” Based on that conclusion, Alexa asks whether she wants a recipe for chicken soup. When she says no, it offers to sell her cough drops with one-hour delivery.

Another Amazon patent suggests an app to help a store salesperson decipher a shopper’s voice to plumb unconscious reactions to products. The contention is that the way people sound reveals what they like better than their words do.

And one of Google’s proprietary inventions involves tracking family members in real time using special microphones placed throughout a home. Based on the pitch of voice signatures, Google circuitry infers gender and age information – for example, one adult male and one female child – and tags them as separate individuals.

The company’s patent asserts that over time the system’s “household policy manager” will be able to compare life patterns, such as when and how long family members eat meals, how long the children watch television, and when electronic game devices are working – and then have the system suggest better eating schedules for the kids, or offer to control their TV viewing and game playing.

## Seductive surveillance

In the West, the road to this advertising future starts with firms encouraging users to give them permission to gather voice data. Firms gain customers’ permission by enticing them to buy inexpensive voice technologies.

When tech companies have further developed voice analysis software – and people have become increasingly reliant on voice devices – I expect the companies to begin widespread profiling and marketing based on voice data. Hewing to the letter if not the spirit of whatever privacy laws exist, the companies will, I expect, forge ahead into their new incarnations, even if most of their users joined before this new business model existed.

This classic bait and switch marked the rise of both Google and Facebook. Only when the numbers of people flocking to these sites became large enough to attract high-paying advertisers did their business models solidify around selling ads personalized to what Google and Facebook knew about their users.

By then, the sites had become such important parts of their users’ daily activities that people felt they couldn’t leave, despite their concerns about data collection and analysis that they didn’t understand and couldn’t control.

This strategy is already starting to play out as tens of millions of consumers buy Amazon Echoes at giveaway prices.

## The dark side of voice profiling

Here’s the catch: It’s not clear how accurate voice profiling is, especially when it comes to emotions.

It is true, according to Carnegie Mellon voice recognition scholar Rita Singh, that the activity of your vocal nerves is connected to your emotional state. However, Singh told me she worries that, with machine-learning packages so easily available, people with limited skills will be tempted to run shoddy analyses of people’s voices, leading to conclusions that are as dubious as the methods.

She also argues that inferences that link physiology to emotions and forms of stress may be culturally biased and prone to error. That concern hasn’t deterred marketers, who typically use voice profiling to draw conclusions about individuals’ emotions, attitudes and personalities.

While some of these advances promise to make life easier, it’s not difficult to see how voice technology can be abused and exploited. What if voice profiling tells a prospective employer that you’re a bad risk for a job that you covet or desperately need? What if it tells a bank that you’re a bad risk for a loan? What if a restaurant decides it won’t take your reservation because you sound low class, or too demanding?

Consider, too, the discrimination that can take place if voice profilers follow some scientists’ claims that it is possible to use an individual’s vocalizations to tell the person’s height, weight, race, gender and health.

People are already subjected to different offers and opportunities based on the personal information companies have collected. Voice profiling adds an especially insidious means of labeling. Today, some states, such as Illinois and Texas, require companies to ask for permission before conducting analysis of vocal, facial or other biometric features.

But other states expect people to learn what information is collected about them from privacy policies or terms of service, which in practice means they rarely will. And the federal government hasn’t enacted a sweeping marketing surveillance law.

With the looming widespread adoption of voice analysis technology, it’s important for government leaders to adopt policies and regulations that protect the personal information revealed by the sound of a person’s voice.

One proposal: While the use of voice authentication – or using a person’s voice to prove their identity – could be allowed under certain carefully regulated circumstances, all voice profiling should be prohibited in marketers’ interactions with individuals. This prohibition should also apply to political campaigns and to government activities without a warrant.

That seems like the best way to ensure that the coming era of voice profiling is constrained before it becomes too integrated into daily life and too pervasive to control.

This article by Joseph Turow, Robert Lewis Shayon Professor of Media Systems & Industries, University of Pennsylvania, is republished from The Conversation under a Creative Commons license. Read the original article.
