# Everything you need to know about model-free and model-based reinforcement learning

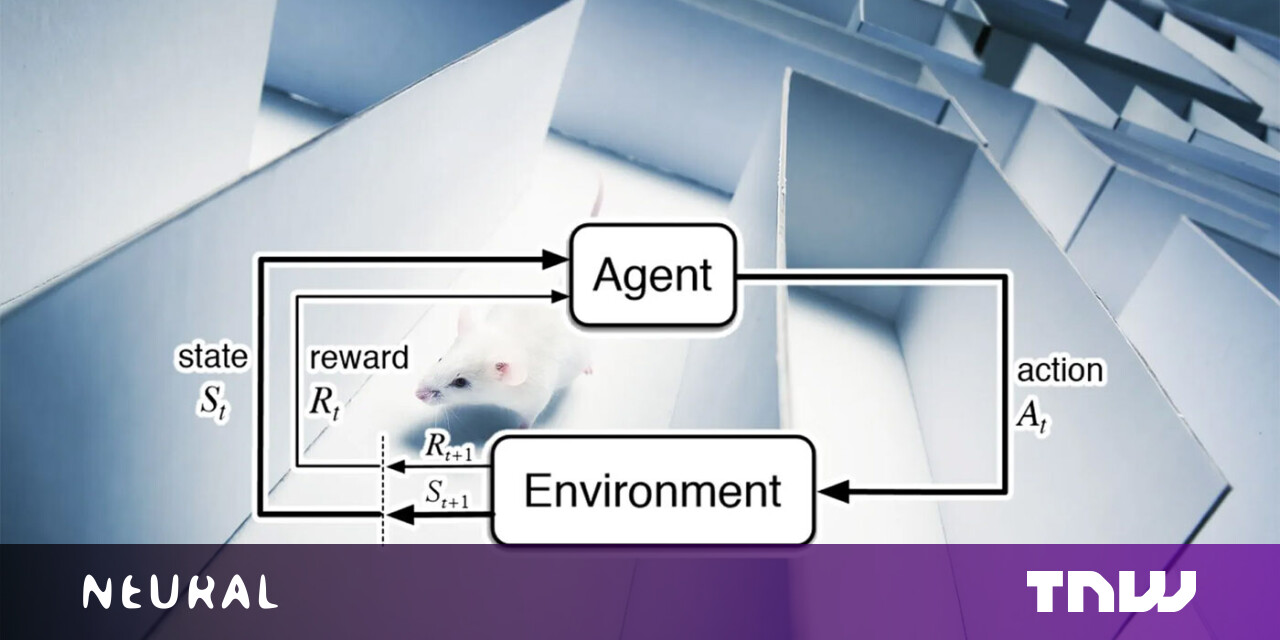

There are many different types of reinforcement learning algorithms, but two main categories are “model-based” and “model-free” RL. They are both inspired by our understanding of learning in humans and animals.

Nearly every book on reinforcement learning contains a chapter that explains the differences between model-free and model-based reinforcement learning. But seldom are the biological and evolutionary precedents discussed in books about reinforcement learning algorithms for computers.

I found a very interesting explanation of model-free and model-based RL in *The Birth of Intelligence*, a book that explores the evolution of intelligence. In a conversation with TechTalks, Daeyeol Lee, neuroscientist and author of *The Birth of Intelligence*, discussed different modes of reinforcement learning in humans and animals, AI and natural intelligence, and future directions of research.

American psychologist Edward Thorndike proposed the “law of effect,” which became the basis for model-free reinforcement learning

In the late nineteenth century, psychologist Edward Thorndike proposed the “law of effect,” which states that actions with positive effects in a particular situation become more likely to occur again in that situation, and responses that produce negative effects become less likely to occur in the future.

Thorndike explored the law of effect with an experiment in which he placed a cat inside a puzzle box and measured the time it took for the cat to escape. To escape, the cat had to manipulate a series of gadgets such as strings and levers. Thorndike observed that as the cat interacted with the puzzle box, it learned the behavioral responses that could help it escape. Over time, the cat became faster and faster at escaping the box. Thorndike concluded that the cat learned from the rewards and punishments that its actions produced.

The law of effect later paved the way for behaviorism, a branch of psychology that tries to explain human and animal behavior in terms of stimuli and responses.

The law of effect is also the basis for model-free reinforcement learning. In model-free reinforcement learning, an agent perceives the world, takes an action, and measures the reward. The agent usually starts by taking random actions and gradually repeats those that are associated with more rewards.

“You basically look at the state of the world, a snapshot of what the world looks like, and then you take an action. Afterward, you increase or decrease the probability of taking the same action in the given situation depending on its outcome,” Lee said. “That’s basically what model-free reinforcement learning is. The simplest thing you can imagine.”

In model-free reinforcement learning, there’s no direct knowledge or model of the world. The RL agent must directly experience every outcome of each action through trial and error.
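As a rough illustration, the sketch below runs tabular Q-learning, one standard model-free algorithm, on a hypothetical five-cell corridor with a reward in the last cell. The corridor, the reward, and the parameter values (`alpha`, `gamma`, `epsilon`) are assumptions made for the example. Q-learning nudges action values rather than action probabilities directly; the epsilon-greedy rule then turns those values into choices. The agent only samples `step`; it never inspects how the environment works.

```python
import random

N_STATES = 5          # hypothetical corridor: cells 0..4, reward waits in cell 4
ACTIONS = (-1, +1)    # step left or step right
GOAL = N_STATES - 1


def step(state, action):
    """The environment: the agent can sample it but never inspects its rules."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # One value per (state, action) pair: no model of the world, just a table.
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Mostly repeat what has paid off so far, occasionally try something random.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                # Random tie-breaking so untrained states are explored evenly.
                action = max(ACTIONS, key=lambda a: (q[(state, a)], rng.random()))
            next_state, reward, done = step(state, action)
            # Law-of-effect style update: move the value of the action just taken
            # toward the reward it produced plus the best value that follows.
            future = 0.0 if done else gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)
```

After training, the greedy policy should step right from every non-goal cell, learned purely from experienced rewards.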

American psychologist Edward C. Tolman proposed the idea of “latent learning,” which became the basis of model-based reinforcement learning

Thorndike’s law of effect was prevalent until the 1930s, when another psychologist, Edward Tolman, gained an important insight while studying how fast rats could learn to navigate mazes. During his experiments, Tolman realized that animals could learn things about their environment without reinforcement.

For example, when a rat is let loose in a maze, it will freely explore the tunnels and gradually learn the structure of the environment. If the same rat is later reintroduced to the maze and given a reinforcement signal, such as food at a particular location or an exit to find, it can reach its goal much more quickly than animals that did not have the opportunity to explore the maze. Tolman called this “latent learning.”

Latent learning enables animals and humans to develop a mental representation of their world, simulate hypothetical scenarios in their minds, and predict the outcomes. This is also the basis of model-based reinforcement learning.

“In model-based reinforcement learning, you develop a model of the world. In terms of computer science, it’s a transition probability, how the world goes from one state to another state depending on what kind of action you produce in it,” Lee said. “When you’re in a given situation where you’ve already learned the model of the environment previously, you’ll do a mental simulation. You’ll basically search through the model you’ve acquired in your brain and try to see what kind of outcome would occur if you take a particular series of actions. And when you find the path of actions that will get you to the goal that you want, you’ll start taking those actions physically.”
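Tolman's latent learning and Lee's description of mental simulation can be sketched together. Below, a hypothetical agent first wanders a small maze with no reward at all, counting which cell each move leads to; normalizing those counts gives its estimate of the transition probabilities. Only afterward is a goal revealed, and the agent runs value iteration over its learned model, a stand-in for mental simulation, before taking a single real step. The maze layout, the reward of 1 at the goal, and all function names are assumptions for illustration; value iteration is just one of many ways to search a model.

```python
import random
from collections import defaultdict

# Hypothetical 3x3 maze. Cells are (row, col); WALLS lists blocked passages.
SIZE = 3
MOVES = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}
WALLS = {((0, 0), (0, 1)), ((1, 1), (1, 2)), ((1, 0), (2, 0))}


def env_step(cell, move):
    """True dynamics, hidden from the agent: walls and edges leave it in place."""
    dr, dc = MOVES[move]
    nxt = (cell[0] + dr, cell[1] + dc)
    off_grid = not (0 <= nxt[0] < SIZE and 0 <= nxt[1] < SIZE)
    if off_grid or (cell, nxt) in WALLS or (nxt, cell) in WALLS:
        return cell
    return nxt


def explore(steps=2000, seed=1):
    """Latent learning: wander with no reward and count observed transitions."""
    rng = random.Random(seed)
    counts = defaultdict(lambda: defaultdict(int))
    cell = (0, 0)
    for _ in range(steps):
        move = rng.choice(list(MOVES))
        nxt = env_step(cell, move)
        counts[(cell, move)][nxt] += 1
        cell = nxt
    # Normalize counts into estimated transition probabilities P(s' | s, a).
    return {sa: {s2: n / sum(c.values()) for s2, n in c.items()}
            for sa, c in counts.items()}


def plan(model, goal, gamma=0.9, sweeps=50):
    """Mental simulation: value iteration over the learned model only."""
    states = {s for s, _ in model}
    value = {s: 0.0 for s in states}

    def q(s, a):
        # Entering the goal pays 1 and ends the run; otherwise look further ahead.
        return sum(p * (1.0 if s2 == goal else gamma * value.get(s2, 0.0))
                   for s2, p in model.get((s, a), {}).items())

    for _ in range(sweeps):
        for s in states:
            if s != goal:
                value[s] = max(q(s, a) for a in MOVES)
    return {s: max(MOVES, key=lambda a: q(s, a)) for s in states if s != goal}


# The goal at (2, 2) is revealed only now; the agent plans before moving.
model = explore()
policy = plan(model, goal=(2, 2))
cell, path = (0, 0), [(0, 0)]
while cell != (2, 2) and len(path) < 20:
    cell = env_step(cell, policy[cell])
    path.append(cell)
print(path)
```

The executed path is the shortest one through the maze, found entirely in "imagination" from knowledge gathered while no reward was on offer.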

The main benefit of model-based reinforcement learning is that it spares the agent from having to discover every outcome through trial and error in its environment. For example, if you hear about an accident that has blocked the road you usually take to work, model-based RL allows you to mentally simulate alternative routes and change your path. With model-free reinforcement learning, the new information would be of no use to you. You would proceed as usual until you reached the accident scene, and only then would you start updating your value function and exploring other actions.
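The commute example can be made concrete with a toy road map. This sketch assumes a hypothetical five-node network and measures route length in road segments rather than distance. The model-based agent replans with breadth-first search as soon as it edits its map, while a cached model-free habit (here just a lookup table, `habit`) keeps pointing at the blocked road.

```python
from collections import deque

# Hypothetical road network: each intersection lists the ones reachable from it.
roads = {
    "home": ["A", "B"],
    "A": ["work"],
    "B": ["C"],
    "C": ["work"],
    "work": [],
}


def shortest_route(graph, start, goal):
    """Model-based: search the internal map (breadth-first) before moving at all."""
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        route = frontier.popleft()
        if route[-1] == goal:
            return route
        for nxt in graph[route[-1]]:
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(route + [nxt])
    return None


# Model-free: a habit distilled from past commutes. It stores what to do,
# not why, so it cannot use news about roads it has not just driven on.
habit = {"home": "A", "A": "work"}

print(shortest_route(roads, "home", "work"))  # usual route via A

# News arrives: the road from A to work is blocked. The model-based agent edits
# one entry of its map and replans without leaving home...
roads["A"] = []
print(shortest_route(roads, "home", "work"))

# ...while the habit still sends the model-free agent toward A, where it will
# only discover the blockage on arrival.
print(habit["home"])
```

The design choice worth noting is that the model-based update touches a single fact about the world, and planning propagates its consequences; the habit table has no way to do that without new experience.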

Model-based reinforcement learning has been especially successful in AI systems that master board games such as chess and Go, where the rules are known and the environment is deterministic.

Model-based RL has its drawbacks, though. In some cases, creating a decent model of the environment is either impossible or too difficult. And planning with a model can be very time-consuming, which can prove dangerous or even fatal in time-sensitive situations.

“Computationally, model-based reinforcement learning is a lot more elaborate. You have to acquire the model, do the mental simulation, and you have to find the trajectory in your neural processes and then take the action,” Lee said.

Lee added, however, that model-based reinforcement learning does not necessarily have to be more complicated than model-free RL.

“What determines the complexity of model-free RL is all the possible combinations of stimulus set and action set,” he said. “As you have more and more states of the world or sensor representation, the pairs that you’re going to have to learn between states and actions are going to increase. Therefore, even though the idea is simple, if there are many states and those states are mapped to different actions, you’ll need a lot of memory.”
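Lee's point about memory is easy to quantify. Assuming, for illustration, that each observation is a vector of binary sensor features and that the agent has four actions, a tabular model-free learner needs one entry per state-action pair, and the number of states doubles with every added feature:

```python
def table_entries(n_binary_features: int, n_actions: int) -> int:
    """State-action values a tabular model-free learner must store (hypothetical sensor)."""
    n_states = 2 ** n_binary_features  # every on/off combination is a distinct state
    return n_states * n_actions


for n in (10, 20, 30):
    print(n, table_entries(n, n_actions=4))
```

At four bytes per stored value, the 30-feature table would already need 16 GiB.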

In contrast, in model-based reinforcement learning, the complexity depends on the model you build. If the environment is really complicated but can be captured by a relatively simple model that can be acquired quickly, then the simulation will be much simpler and more cost-efficient.

“And if the environment tends to change relatively frequently, then rather than trying to relearn the stimulus-action pair associations whenever the world changes, you can have a much more efficient outcome if you’re using model-based reinforcement learning,” Lee said.

Basically, neither model-based nor model-free reinforcement learning is a perfect solution. And wherever you see a reinforcement learning system tackling a complicated problem, it is likely using both model-based and model-free RL, and possibly other forms of learning too.

Research in neuroscience shows that humans and animals have multiple forms of learning, and that the brain constantly switches between these modes depending on how certain it is about each of them at any given moment.

“If the model-free RL is working really well and it is accurately predicting the reward all the time, that means there’s less uncertainty with model-free and you’re going to use it more,” Lee said. “And on the contrary, if you have a really accurate model of the world and you can do the mental simulations of what’s going to happen every moment of time, then you’re more likely to use model-based RL.”
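One simple way to express this arbitration is to score each system by its recent reward-prediction errors and hand control to whichever has been more reliable. The sketch below uses an exponentially weighted mean squared error as the uncertainty measure. That measure, the `decay` value, the starting uncertainty, and the prediction streams in the demo are all made-up assumptions standing in for real learners; more principled variants track full Bayesian uncertainty. Both systems are scored on every trial, including when they are not in control.

```python
from dataclasses import dataclass, field


@dataclass
class Arbitrator:
    """Hands control to whichever learning system has predicted reward best lately."""
    decay: float = 0.9  # hypothetical forgetting factor for old errors
    uncertainty: dict = field(default_factory=dict)

    def record(self, system: str, predicted: float, actual: float) -> None:
        # Exponentially weighted mean squared prediction error, used here
        # as a simple stand-in for the system's uncertainty.
        err2 = (actual - predicted) ** 2
        old = self.uncertainty.get(system, 1.0)  # start out unsure (assumption)
        self.uncertainty[system] = self.decay * old + (1 - self.decay) * err2

    def choose(self) -> str:
        return min(self.uncertainty, key=self.uncertainty.get)


arb = Arbitrator()
choices = {}
# Hypothetical prediction streams: the model-free cache is accurate at first;
# at trial 20 the world changes and only the (updated) world model keeps up.
for t in range(40):
    actual = 1.0 if t < 20 else 0.0
    mf_pred = 1.0 if t < 20 else max(0.0, 1.0 - 0.05 * (t - 19))  # slowly relearned habit
    mb_pred = 0.8 if t < 20 else 0.0  # rough model, but it tracks the change
    arb.record("model-free", mf_pred, actual)
    arb.record("model-based", mb_pred, actual)
    choices[t] = arb.choose()

print(choices[19], choices[39])
```

Before the change, the cheaper habit is trusted because its predictions are spot on; after the change, its large errors shift control to the model-based system.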

In recent years, there has been growing interest in creating AI systems that combine multiple modes of reinforcement learning. For example, research by scientists at UC San Diego shows that combining model-free and model-based reinforcement learning achieves superior performance in control tasks.

“If you look at a complicated algorithm like AlphaGo, it has elements of both model-free and model-based RL,” Lee said. “It learns the state values based on board configurations, and that is basically model-free RL, because you’re [learning] values depending on where all the stones are. But it also does forward search, which is model-based.”
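Lee's description can be illustrated on a much smaller game. In this toy sketch, a pile of stones replaces the Go board: players alternately take one or two stones, and whoever takes the last stone wins. The value table is learned model-free with TD(0) from sampled self-play, and a depth-limited negamax search then uses the game's rules as the model, scoring the search frontier with the learned values. This only mirrors the general pattern; AlphaGo itself uses deep neural networks and Monte Carlo tree search, and the game, function names, and parameters here are assumptions.

```python
import random

MAX_PILE = 10
MOVES = (1, 2)  # take one or two stones; whoever takes the last stone wins


def legal(n):
    return [a for a in MOVES if a <= n]


def learn_values(episodes=20000, alpha=0.1, epsilon=0.1, seed=0):
    """Model-free part: TD(0) on sampled self-play games, no lookahead.
    v[n] is the value of facing n stones for the player about to move."""
    rng = random.Random(seed)
    v = [0.0] * (MAX_PILE + 1)
    v[0] = -1.0  # facing an empty pile means the opponent took the last stone
    for _ in range(episodes):
        n = rng.randint(1, MAX_PILE)
        while n > 0:
            if rng.random() < epsilon:
                a = rng.choice(legal(n))
            else:
                a = max(legal(n), key=lambda m: -v[n - m])
            # The opponent now faces n - a, so our value is the negation of theirs.
            v[n] += alpha * (-v[n - a] - v[n])
            n -= a
    return v


def negamax(n, depth, v):
    """Model-based part: forward search using the known rules, with learned
    values standing in for the rest of the game at the search frontier."""
    if n == 0:
        return -1.0  # the player to move has already lost
    if depth == 0:
        return v[n]
    return max(-negamax(n - a, depth - 1, v) for a in legal(n))


def best_move(n, v, depth=2):
    return max(legal(n), key=lambda a: -negamax(n - a, depth - 1, v))


v = learn_values()
print([best_move(n, v) for n in (7, 8, 10)])
```

The learned values alone only say how good a position looks; the search uses the rules to check what actually follows from each move before trusting those values.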

But despite remarkable achievements, progress in reinforcement learning is still slow. As soon as RL models are faced with complex and unpredictable environments, their performance starts to degrade. For example, creating a reinforcement learning system that played Dota 2 at championship level required tens of thousands of years' worth of gameplay, a feat that is physically impossible for humans. Other tasks, such as robotic hand manipulation, also require huge amounts of training and trial and error.

Part of the reason reinforcement learning still struggles with efficiency is the remaining gap in our knowledge of how humans and animals learn. And, Lee believes, our brains use much more than just model-free and model-based reinforcement learning.

“I think our brain is a pandemonium of learning algorithms that have evolved to handle many different situations,” he said.

In addition to constantly switching between these modes of learning, the brain manages to maintain and update them all the time, even when they are not actively involved in decision-making.

“When you have multiple learning algorithms, they become useless if you turn some of them off. Even if you’re relying on one algorithm—say model-free RL—the other algorithms must continue to run. I still have to update my world model rather than keep it frozen because if I don’t, several hours later, when I realize that I need to switch to the model-based RL, it will be obsolete,” Lee said.

Some interesting work in AI research shows how this might work. A recent technique inspired by psychologist Daniel Kahneman’s System 1 and System 2 thinking shows that maintaining different learning modules and updating them in parallel helps improve the efficiency and accuracy of AI systems.

Another thing that we still have to figure out is how to apply the right inductive biases in our AI systems to make sure they learn the right things in a cost-efficient way. Billions of years of evolution have provided humans and animals with the inductive biases needed to learn efficiently and with as little data as possible.

“The information that we get from the environment is very sparse. And using that information, we have to generalize. The reason is that the brain has inductive biases and has biases that can generalize from a small set of examples. That is the product of evolution, and a lot of neuroscientists are getting more interested in this,” Lee said.

However, while inductive biases might be easy to understand for an object recognition task, they become a lot more complicated for abstract problems such as building social relationships.

“The idea of inductive bias is quite universal and applies not just to perception and object recognition but to all kinds of problems that an intelligent being has to deal with,” Lee said. “And I think that is in a way orthogonal to the model-based and model-free distinction because it’s about how to build an efficient model of the complex structure based on a few observations. There’s a lot more that we need to understand.”
