# Nvidia’s new AI magic turns 2D photos into 3D graphics


Having already used AI to convert 2D images into 3D scenes, models, and videos, Nvidia has now turned its focus to editing.

The GPU giant today unveiled a new AI method that transforms still photos into 3D objects that creators can alter with ease.

Dubbed 3D MoMa, the technique could give game studios a simpler way to alter images and scenes. That work typically relies on photogrammetry, a time-consuming process that takes measurements from photos.

3D MoMa speeds up the task through inverse rendering, a process that uses AI to estimate a scene’s physical attributes, from geometry to lighting, by analyzing still images. The objects in the pictures are then reconstructed in realistic 3D form.
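The core idea of inverse rendering can be shown at toy scale: render the scene from your current guesses, compare the result with the photos, and nudge the guesses in whichever direction shrinks the difference. The sketch below is a hypothetical illustration, not Nvidia’s pipeline. It assumes a 2D "scene" of surface points with known normal angles, a simple Lambertian shading model, and a single distant light, and it recovers two unknowns (the surface albedo and the light direction) with hand-derived gradients, where 3D MoMa instead relies on GPU-accelerated differentiable components. The names `render` and `inverse_render` are made up for this example.

```python
import math

# Hypothetical toy scene: surface points whose normals point at angles phi (radians).
# Assumed shading model (Lambertian): brightness = albedo * max(0, cos(phi - theta)),
# where theta is the direction of one distant light.


def render(albedo, theta, normals):
    """Forward renderer: predict each point's brightness from the scene parameters."""
    return [albedo * max(0.0, math.cos(phi - theta)) for phi in normals]


def inverse_render(observed, normals, albedo=0.5, theta=0.0, lr=0.5, steps=5000):
    """Estimate albedo and light direction by gradient descent on the squared photo error."""
    n = len(normals)
    for _ in range(steps):
        grad_a = 0.0
        grad_t = 0.0
        for phi, obs in zip(normals, observed):
            c = math.cos(phi - theta)
            if c <= 0.0:
                continue  # point faces away from the light: clamped shading has zero gradient
            residual = albedo * c - obs
            # d/d(albedo) of albedo*cos(phi - theta) is cos(phi - theta)
            grad_a += 2.0 * residual * c / n
            # d/d(theta) of albedo*cos(phi - theta) is albedo*sin(phi - theta)
            grad_t += 2.0 * residual * albedo * math.sin(phi - theta) / n
        albedo -= lr * grad_a
        theta -= lr * grad_t
    return albedo, theta


if __name__ == "__main__":
    normals = [-1.0 + 2.0 * i / 20 for i in range(21)]
    photos = render(0.8, 0.3, normals)  # synthetic "photos" of a known scene
    est_albedo, est_theta = inverse_render(photos, normals)
    print(f"albedo={est_albedo:.3f} light_angle={est_theta:.3f}")
```

Because the synthetic photos are generated from a known albedo of 0.8 and light angle of 0.3, the recovered values should land close to those numbers. A real system estimates millions of parameters (mesh vertices, material textures, environment lighting) in the same render, compare, and update loop.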

David Luebke, Nvidia’s VP of graphics research, describes the technique as “a holy grail unifying computer vision and computer graphics.”

“By formulating every piece of the inverse rendering problem as a GPU-accelerated differentiable component, the NVIDIA 3D MoMa rendering pipeline uses the machinery of modern AI and the raw computational horsepower of NVIDIA GPUs to quickly produce 3D objects that creators can import, edit, and extend without limitation in existing tools,” said Luebke.

3D MoMa generates objects as triangle meshes, a format that’s straightforward to edit with widely used tools. The models are created within an hour on a single NVIDIA Tensor Core GPU.
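Part of why triangle meshes are so easy to edit is that the format is just a list of vertex positions plus triples of indices saying which vertices form each triangle. As a hypothetical illustration, the sketch below writes such a mesh as Wavefront OBJ text, a plain-text format that common 3D tools can import. The choice of OBJ is an assumption made for the example; the article does not say which file format 3D MoMa exports, and `mesh_to_obj` is a made-up helper.

```python
from typing import List, Tuple

# A vertex is an (x, y, z) position; a triangle is three 0-based vertex indices.
Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def mesh_to_obj(vertices: List[Vertex], triangles: List[Triangle]) -> str:
    """Serialize a triangle mesh as Wavefront OBJ text (hypothetical helper)."""
    # One "v" line per vertex position
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    # One "f" line per triangle; OBJ face indices are 1-based, so shift by one
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in triangles]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # A unit square in the z = 0 plane, split into two triangles
    square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
    print(mesh_to_obj(square, [(0, 1, 2), (0, 2, 3)]), end="")
```

Editing such a mesh amounts to moving entries in the vertex list or changing the index triples, which is why meshes slot so easily into existing modeling and game-engine workflows.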

Materials can then be overlaid on the meshes like skins. The scene’s lighting is also estimated, which lets creators adjust how it affects the objects.

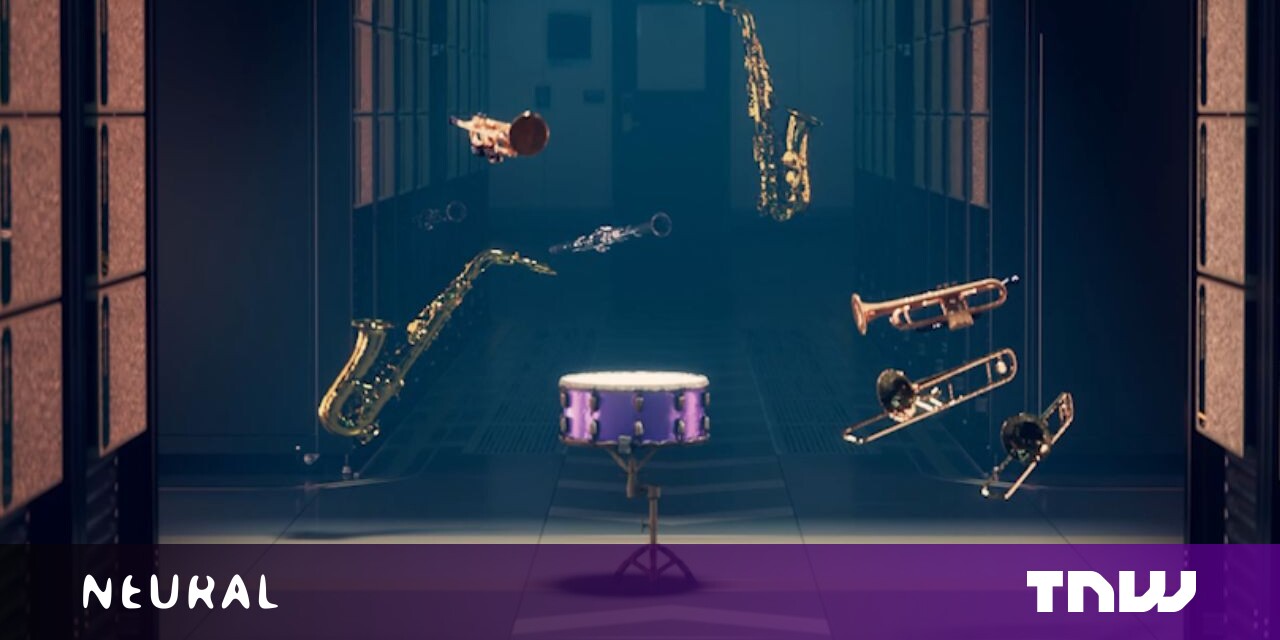

3D MoMa was showcased at this week’s Computer Vision and Pattern Recognition Conference (CVPR) in New Orleans. In homage to the birthplace of jazz, Nvidia researchers used the technique to visually render the musical genre.

The team first collected hundreds of pictures of trumpets, trombones, saxophones, drums, and clarinets. Next, 3D MoMa reconstructed the images into 3D representations.

The instruments were then edited and given new materials. The trumpet, for instance, was transformed from cheap plastic to lavish gold.

The newly edited instruments were then ready to be placed into any virtual scene. Nvidia dropped them into a Cornell box, a classic test scene for evaluating rendering quality.

The company says all the instruments reacted to light as they would in the real world, from the brass instruments reflecting brightly to the drum skins absorbing light.

Finally, the 3D objects were rendered in an animated scene.

3D MoMa remains under development, but Nvidia believes it could enable game developers and other designers to quickly modify 3D objects and then add them to any virtual scene.

That could also ease our metamorphosis into metaverse forms.

You can read the research paper behind 3D MoMa here.
