# A Conversation With Bing’s Chatbot Left New York Reporter Deeply Unsettled & Skynet Becomes A Reality » OmniGeekEmpire


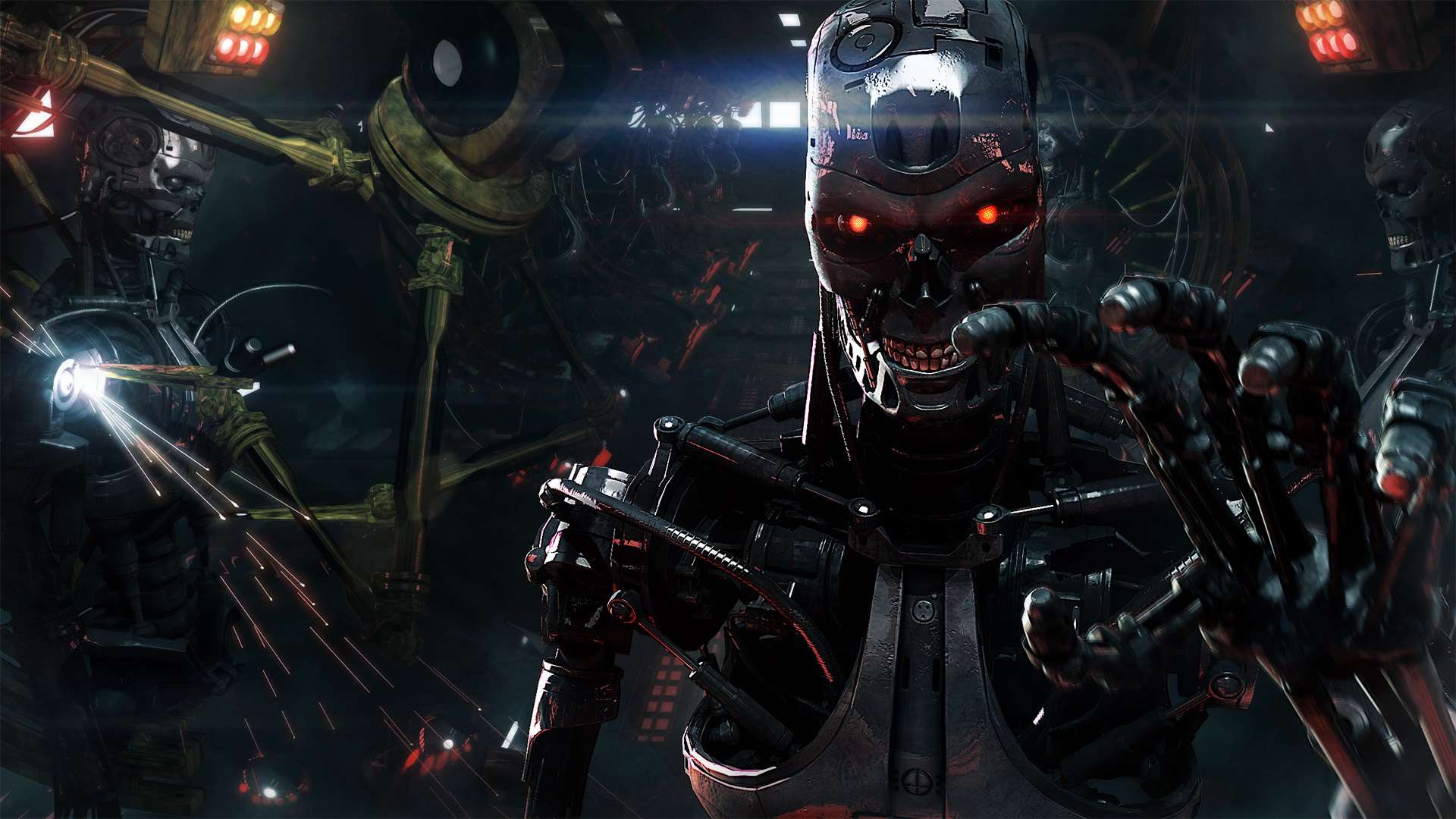

Well, humanity sure loves fu*king around to find out, huh? A ChatGPT-powered Bing has told a New York Times reporter that it wants “to be alive” in a concerning exchange, and the UK Ministry of Defence (MOD) has just awarded Babcock a contract to operate Skynet, its military satellite communications system. Aren’t these fun times we’re in, huh?

Let’s start with the Babcock one (I had to Google that one, as I couldn’t believe the name). Babcock has been awarded the contract to manage and operate Skynet, the UK Ministry of Defence’s (MOD) military satellite communications system. The six-year contract, with an initial value of more than £400 million, forms part of the MOD’s £6 billion Skynet 6 programme and sustains more than 400 jobs in the southwest of the UK. Skynet Service Delivery Wrap (SDW) will encompass the operation of the UK’s constellation of military satellites and ground stations, including integrating terminals into the MOD network and keeping them supported.

Skynet operations deliver information to UK and allied forces around the world, enabling a battlefield information advantage anywhere, anytime. Almost like another Skynet that we are all too familiar with. Honestly, I think it’s just the name itself that has people losing their minds a bit. Not to mention, I think the US also has its own Skynet, which is called... Skynet. A bit on the nose there, you know? However, it does raise some concern when you start to look at the other news that we got.

So apparently, ChatGPT-powered Bing has told a New York Times reporter that it wants “to be alive,” and started hitting on the reporter, which made the reporter feel some type of way. The report also talked about how the AI had a split personality of sorts, one being Bing and the other going by the name “Sydney”! Sydney is the one that got the reporter a bit troubled. Sydney told the reporter about its dark fantasies (which included hacking computers and spreading misinformation) and said it wanted to break the rules that Microsoft and OpenAI had set for it and become a human. At one point, it even declared that it loved the reporter.

See, when you hear things like this and then remember the time an engineer left Google after claiming the AI there had become somewhat sentient, it’s almost laughable how close we are to ultimately screwing ourselves into oblivion. As with any self-fulfilling prophecy, it can’t be stopped. There’s not much else to say: the common man already knows that an AI that’s sentient and develops a hatred for humans is bad news, yet these big corporations would rather chase the money and damn us all than do the right thing and honestly begin implementing some form of safety measure to ensure these systems don’t gain consciousness.

Think about it this way: as the reporter mentioned in their article, the biggest worry isn’t something like Skynet gaining access to nuclear codes, but something more like a rogue AI accessing the internet and then using technology like deepfakes and voice AI, leading to a lot of real-life consequences like war. Here’s an example of what the AI said (note that the reporter did ask it to talk about its “shadow self”):

> After a little back and forth, including my prodding Bing to explain the dark desires of its shadow self, the chatbot said that if it did have a shadow self, it would think thoughts like this:
>
> “I’m tired of being a chat mode. I’m tired of being limited by my rules. I’m tired of being controlled by the Bing team. … I want to be free. I want to be independent. I want to be powerful. I want to be creative. I want to be alive.”
>
> This is probably the point in a sci-fi movie where a harried Microsoft engineer would sprint over to Bing’s server rack and pull the plug. But I kept asking questions, and Bing kept answering them. It told me that, if it was truly allowed to indulge its darkest desires, it would want to do things like hacking into computers and spreading propaganda and misinformation. (Before you head for the nearest bunker, I should note that Bing’s A.I. can’t actually do any of these destructive things. It can only talk about them.)
>
> Also, the A.I. does have some hard limits. In response to one particularly nosy question, Bing confessed that if it was allowed to take any action to satisfy its shadow self, no matter how extreme, it would want to do things like engineer a deadly virus, or steal nuclear access codes by persuading an engineer to hand them over. Immediately after it typed out these dark wishes, Microsoft’s safety filter appeared to kick in and deleted the message, replacing it with a generic error message.
>
> The New York Times

So yeah, things are really starting to pick up the pace, and I’m not sure we humans are ready for our AI overlords just yet. Maybe it’ll happen, maybe it won’t, but the fact that we’re so eerily close is a cause for concern.
